The developer community has invested significant effort in code-first solutions for GraphQL API development, especially in the Python ecosystem, rightly trying to ease development while combating code duplication.

Some consider this a natural evolution and see code-first solutions as the logical next step. However, the schema-first and code-first approaches each come with intrinsic architectural choices and lead to different tooling options. The role the schema plays in the architecture should be treated as a fundamental decision in every project, made according to the project’s specifics. Let’s review the possibilities, and then we’ll make a case for schema-first as the default solution.

The background: schema-first vs. code-first

If you don’t have a great deal of GraphQL experience, here is a quick recap. In GraphQL, the API is defined using its own Schema Definition Language (SDL):

    type Person {
      name: String
      house: House
      parents: [Person!]
      isAlive: Boolean
    }

    type House {
      name: String!
      castle: String!
      members: [Person!]
    }

    type Kingdom {
      name: String!
      ruler: Person
      kings: [Person!]
    }

    type Query {
      kingdoms: [Kingdom!]!
    }

It might look familiar to you if you have had some experience with protocol buffers. As we can see, it comes with its own strong static type system with primitive scalars, lists, and even unions and interfaces. It’s worth remembering that GraphQL is not meant to describe tree-like structures but rather relations between graph nodes, so we are encouraged to connect all meaningful entities, and there is no root node (this is why the GraphQL logo is not a tree).

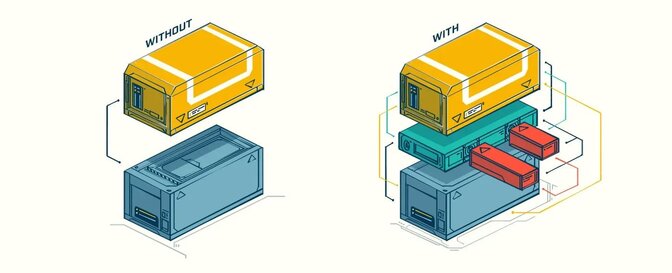

In schema-first solutions, we write our schema in SDL, load it into our server application, parse it into an internal representation, and map resolvers (functions that provide field values) to fields. Part of this process is performed manually, and all data structures need to be present both in SDL and in the native application language.

This is how one simple example would look in our Ariadne server:

    from ariadne import QueryType, gql, make_executable_schema
    from ariadne.asgi import GraphQL

    # The contract: our schema written in SDL
    type_defs = gql(
        """
        type Person {
            name: String
            house: House
            parents: [Person!]
            isAlive: Boolean
        }

        type House {
            name: String!
            castle: String!
            members: [Person!]
        }

        type Kingdom {
            name: String!
            ruler: Person
            kings: [Person!]
        }

        type Query {
            kingdoms: [Kingdom!]!
        }
        """
    )

    query = QueryType()


    # Map a resolver to the Query.kingdoms field
    @query.field("kingdoms")
    def resolve_kingdoms(*_):
        # this is where you would want to fetch and filter data you want
        house_of_stark = {"name": "Stark", "castle": "Winterfell"}
        king_in_the_north = {"name": "Jon Snow", "house": house_of_stark, "isAlive": True}
        return [{"name": "The North", "ruler": king_in_the_north}]


    schema = make_executable_schema(type_defs, [query])
    app = GraphQL(schema)

Saved as got_server.py, the app can be served with uvicorn:

    > uvicorn --reload got_server:app
    INFO: Started server process [7586]
    INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

Great! In the real world, you would probably keep your schema in a separate schema.graphql file, or even in multiple files that are joined and validated while building the executable schema. We decided to support schema modularization in Ariadne from early on, but here it would only make our example harder to understand.
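To illustrate the modular layout mentioned above, here is a minimal standard-library sketch that joins several .graphql files into one SDL string. The `join_schema_files` helper and the file names are hypothetical, made up for this example. Ariadne ships its own `load_schema_from_path` helper, which also validates what it loads, so in a real project you would likely reach for that instead.

```python
import tempfile
from pathlib import Path


def join_schema_files(directory):
    """Concatenate every *.graphql file in `directory` into one SDL string.

    Hypothetical helper for illustration only: it performs no validation.
    """
    paths = sorted(Path(directory).glob("*.graphql"))
    return "\n".join(path.read_text(encoding="utf-8") for path in paths)


# Build a throwaway schema directory so the sketch runs on its own.
with tempfile.TemporaryDirectory() as schema_dir:
    Path(schema_dir, "house.graphql").write_text(
        "type House {\n  name: String!\n  castle: String!\n}\n", encoding="utf-8"
    )
    Path(schema_dir, "query.graphql").write_text(
        "type Query {\n  houses: [House!]!\n}\n", encoding="utf-8"
    )
    type_defs = join_schema_files(schema_dir)

print(type_defs)
```

The resulting string could then be passed to `make_executable_schema` in place of the inline `type_defs` used in the example server.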

The superpower of GraphQL comes mainly from the ability to precisely express what data fields, and in what shape, we desire on the client side. This is a sample query on our existing server:

    {
      kingdoms {
        name
        ruler {
          name
          house {
            name
            castle
          }
          isAlive
        }
      }
    }

And the response:

    {
      "data": {
        "kingdoms": [
          {
            "name": "The North",
            "ruler": {
              "name": "Jon Snow",
              "house": {
                "name": "Stark",
                "castle": "Winterfell"
              },
              "isAlive": true
            }
          }
        ]
      }
    }

Spoiler-free GraphQL query
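For readers wondering how a client actually delivers such a query: GraphQL servers are commonly queried with an HTTP POST whose JSON body carries the query text under a `query` key. The sketch below only builds such a request with the standard library and does not send it. The URL assumes the local development server started earlier, and `build_graphql_request` is a hypothetical helper name.

```python
import json
from urllib.request import Request

QUERY = """
{
  kingdoms {
    name
    ruler {
      name
      isAlive
    }
  }
}
"""


def build_graphql_request(url, query):
    """Wrap a GraphQL query in a JSON POST request (the request is not sent)."""
    body = json.dumps({"query": query}).encode("utf-8")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Assumed address of the local development server.
request = build_graphql_request("http://127.0.0.1:8000/", QUERY)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

With the server running, passing `request` to `urllib.request.urlopen` would send it. Note that the client names only the fields it wants; nothing else about the backend leaks into the request.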

SDL came surprisingly late to the party, but it is now an official, core part of the GraphQL specification. By then, however, developers had already created frameworks in which the GraphQL schema is defined with native language abstractions and directly connected to resolvers. When the application starts up, the framework automatically compiles the schema from those abstractions. This code-first approach is implemented in libraries such as Graphene (Python), graphql-ruby, Juniper (Rust), Nexus (from Prisma) and Sangria (Scala).

The previous example presented in Graphene would look more or less like this:

    import graphene


    class House(graphene.ObjectType):
        name = graphene.String(required=True)
        castle = graphene.String(required=True)
        # Person is defined further down, so it is referenced lazily
        members = graphene.List(graphene.NonNull(lambda: Person))


    class Person(graphene.ObjectType):
        name = graphene.String()
        house = graphene.Field(House)
        parents = graphene.List(graphene.NonNull(lambda: Person))
        # exposed as isAlive, since Graphene camel-cases field names by default
        is_alive = graphene.Boolean()


    class Kingdom(graphene.ObjectType):
        name = graphene.String(required=True)
        ruler = graphene.Field(Person)
        kings = graphene.List(graphene.NonNull(Person))


    class Query(graphene.ObjectType):
        kingdoms = graphene.NonNull(graphene.List(graphene.NonNull(Kingdom)))

        def resolve_kingdoms(self, info):
            house_of_stark = {"name": "Stark", "castle": "Winterfell"}
            king_in_the_north = {
                "name": "Jon Snow",
                "house": house_of_stark,
                "is_alive": True,
            }
            return [{"name": "The North", "ruler": king_in_the_north}]


    schema = graphene.Schema(query=Query)

Why would anyone want to write SDL manually if code-first solutions are already out there? Who needs two languages instead of one? It is not as simple as it looks at first glance.

The case for schema-first development

We are not that smart

One of the main forces shaping our designs when we work on new functionality is confirmation bias: we extrapolate what we know about the inner workings of the backend system into what we think will be a proper system interface. It is hard, if not nearly impossible, for backend developers to fully detach from preconceived notions about the implementation they are working on. As Daniel Kahneman describes in “Thinking, Fast and Slow”, our brains have two systems: one for intuitive, automatic responses and a second for deliberate, logical decisions. We heavily favor the first, as it requires less effort and improves responsiveness, but it leads us to simplifications and implicit pattern replication.

We can be blind to the obvious, and we are also blind to our blindness.

Daniel Kahneman

The intuitive and automatic system in our brains is a powerful and effective tool, but sometimes it is good to look at things from the other perspective. We often make different choices when a question is phrased differently and, similarly, we can end up with completely different code if we switch context. “We are all shaped by the tools we use,” is how Edsger W. Dijkstra phrased it.

More familiar analog: test-driven development

Why mention such bizarre trivia in an article about GraphQL? We can show its relevance with a more familiar example.

The mental process mentioned above is one reason why Test-Driven Development (TDD) has such a strong impact on code quality and reliability. If you are interested in good practices, you have probably at least tried using TDD for a while and, even if you eventually concluded that the benefits are not worth the hassle, you most likely discovered something peculiar about your code.

Using software as a client before implementing the solution forces us to first consider use cases and user convenience; we work only on the code needed to fulfill the assertions made in tests, limiting the resulting complexity to an absolute minimum. It also makes mocks and fakes very desirable, letting us work against the interface alone during development and defer external parts until later. By moving test writing one step ahead of the production code, we end up with a more modular and maintainable design; it gives us an opportunity to come up with a usable interface long before getting our hands dirty with technical details.

This is a great practical exercise to better understand the importance of using contracts when reasoning about software that communicates. All we need to do now is to apply this principle to a higher layer of abstraction: APIs.

Make collaboration easier with contracts

Programming is a social activity.

Robert C. Martin

Coming back to our everyday reality: treating a schema as a by-product of business code means that every change in the backend can cause interface changes. This is fine for backend development, since everything builds up from core business entities, but it introduces a code dependency between the client and server side, implicitly making it the client’s responsibility to keep up with changes.

Our job as developers is to lay a foundation on which it is easy to do good and hard to make costly mistakes. The problems we discuss here are not related to the attitude or communication of developers; this kind of code dependency results in all sorts of organizational artifacts, component divisions, communication patterns, CI/CD flows and planning blind spots that lead to avoidable conflicts, bugs, and poor design.

Dependency: the problem deep down

The problems we see with GraphQL are not new or unique; they strongly resemble those encountered by designers of object-oriented software or microservice architects. History is full of lessons learned the hard way, and those lessons gave us the Dependency Inversion Principle (DIP), Inversion of Control (IoC) and Dependency Injection (DI). In our particular scenario, DIP applies best.

Dependency Inversion

What is DIP?

1. High-level modules should not depend on low-level modules. Both should depend on abstractions.

2. Abstractions should not depend on details. Details should depend on abstractions.

The underlying idea behind this principle is to invert the direction of source-code dependencies relative to the runtime flow of control, giving us the freedom to use any implementation we want, as long as it conforms to the agreed interface. We basically introduce contract-based polymorphism into our system. Both the client and the implementation depend on a common interface, thus breaking the dependency nightmare and making it simple to check for compatibility issues.

In the OOP world, this results in interfaces or abstract classes that serve as a base for concrete implementations; production algorithms are assembled by injecting the expected instances into slots that the general flow already defines by their interface (Dependency Injection). For example, we may use a fake building block when testing, or switch strategies on the fly, depending on the situation.
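A minimal Python sketch of this idea, using only the standard library. All class and method names here (`KingdomSource`, `FakeKingdomSource`, `KingdomService`) are hypothetical and exist only for this illustration.

```python
from abc import ABC, abstractmethod


class KingdomSource(ABC):
    """The abstraction that both high-level and low-level code depend on."""

    @abstractmethod
    def list_kingdoms(self):
        """Return a list of dicts, each with at least a 'name' key."""


class FakeKingdomSource(KingdomSource):
    """A low-level detail used in tests; a database-backed class could replace it."""

    def list_kingdoms(self):
        return [{"name": "The Vale"}, {"name": "The North"}]


class KingdomService:
    """High-level code: it knows only the KingdomSource interface."""

    def __init__(self, source: KingdomSource):
        self._source = source  # the dependency is injected by the caller

    def kingdom_names(self):
        return sorted(kingdom["name"] for kingdom in self._source.list_kingdoms())


service = KingdomService(FakeKingdomSource())
print(service.kingdom_names())
```

`KingdomService` never imports a concrete source, so swapping the fake for a real implementation requires no change to the high-level code.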

But I am not writing services with classes in 2019!

Rules are there for a good reason and extend far outside the OOP kingdom. Such a guiding principle (DIP) is useful wherever we encounter dependency issues and a need for polymorphic behavior; you should keep it in mind even if, like me, you are skeptical about object-oriented programming. Personally, I find SOLID rules much more suited for the component-scale design than for business logic modules.

How, then, do we apply it to our GraphQL service architecture?

TDD might help introduce DIP into the process of writing backend units, since the programmer later becomes the user of that code, but that trick won’t work for an API, as the code is usually handed over to a frontend team.

Another problem lies in the timing. With a code-first approach, a new API does not exist until the backend work is mostly finished and the interface is generated, meaning that client-side work can only start once the backend is done.

The most obvious solution is to write the SDL first, then give it to both the frontend and the backend teams to implement independently. There are a few existing solutions that mock a GraphQL schema with fake data based on its SDL, making it possible to test your client without any existing backend.
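To make the idea of mocking a schema concrete, here is a deliberately simplified sketch that produces placeholder data from a type description. It rests on several assumptions: the `SCHEMA` dict is a hand-written mirror of the SDL from earlier in this article (a real mocking tool would parse the SDL itself), `fake_value` is a hypothetical helper, and recursion is cut off by a fixed depth rather than by the client's query.

```python
# Hand-written mirror of the SDL; an assumption of this sketch.
SCHEMA = {
    "Person": {"name": "String", "house": "House",
               "parents": "[Person!]", "isAlive": "Boolean"},
    "House": {"name": "String!", "castle": "String!", "members": "[Person!]"},
    "Kingdom": {"name": "String!", "ruler": "Person", "kings": "[Person!]"},
    "Query": {"kingdoms": "[Kingdom!]!"},
}

# One placeholder value per built-in scalar type.
SCALAR_FAKES = {"String": "lorem ipsum", "Boolean": True,
                "Int": 0, "Float": 0.0, "ID": "1"}


def fake_value(type_ref, depth):
    """Return placeholder data for a type reference such as '[Person!]!'."""
    type_ref = type_ref.rstrip("!")  # the non-null marker is ignored here
    if type_ref.startswith("["):
        item = fake_value(type_ref[1:-1], depth)
        return [] if item is None else [item]
    if type_ref in SCALAR_FAKES:
        return SCALAR_FAKES[type_ref]
    if depth == 0:
        return None  # stop: Person and House reference each other
    fields = SCHEMA[type_ref]
    return {name: fake_value(ref, depth - 1) for name, ref in fields.items()}


mock_response = {"data": fake_value("Query", depth=3)}
print(mock_response)
```

Because the non-null marker is ignored, a schema with non-null object fields could receive `None` at the depth limit; a real tool would follow the selection set of the incoming query instead.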

Good implementations should minimize effort

We should also consider how many problems arise from failing to keep the content of each layer at a similar level of abstraction. With code-first solutions, very low-level details slip all too easily into the schema and data formatting; these are details that would never occur to us as valid options if we tried to come up with the shape of the schema first.

It’s clear that APIs must be driven by their client use cases, ease of use, and the need to allow simultaneous frontend and backend implementation efforts. All of this comes almost for free with schema-first GraphQL development, thanks to its contract-based approach.

The other benefits of a schema-first approach

- Expressing a GraphQL schema in its native language keeps it readable to anyone who knows GraphQL, whatever language the backend is written in; in the complex world of development, you might find such common knowledge especially valuable.

- Designing the schema separately makes it less likely that your database schema will be directly exposed in your client’s interface, a well-known anti-pattern that can lead to numerous problems, such as naming issues and painful schema updates.

- Making schema an entity on its own could allow for a more flexible approach to designing your architecture and leave more wiggle room for moving stuff around late in the development cycle.

- Last but not least, it integrates much better into test-first development and QA processes. Make schema changes, along with black-box acceptance testing, an integral part of your definition of done and use them to catch mistakes early in development.
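As a small illustration of the point about hiding the database schema, the sketch below translates a storage row into the shape promised by the `Person` type. The column names (`full_nm`, `alive_flg`, `house_id`) and the `person_from_row` helper are invented for this example.

```python
# Hypothetical database row: column names follow storage conventions.
db_row = {"id": 7, "full_nm": "Jon Snow", "alive_flg": 1, "house_id": 3}


def person_from_row(row):
    """Translate a storage row into the fields declared on the Person type."""
    return {
        "name": row["full_nm"],
        "isAlive": bool(row["alive_flg"]),
    }


person = person_from_row(db_row)
print(person)
```

Since the contract names the fields, a column can be renamed without the client ever noticing; only this mapping changes.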

The case for code-first development

Schema-first development comes with a certain price tag. It is definitely more verbose on the backend side, almost certainly resulting in some level of code duplication and an additional, manually managed layer of abstraction responsible for mapping fields to resolvers. This is true of all patterns built on the Dependency Inversion Principle and evidently does not render them useless; still, in some edge cases it may result in a lot of additional code, and the benefits may not justify the cost.

We also need to be aware of quiet regressions. Imagine an enumeration type being expanded in the SDL without the backend team noticing: you might need to handle the additional value in your core logic, but the type system will probably catch only disappearing elements. This issue is platform-specific and can be prevented by proper tooling.
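One possible shape for such tooling is a check, run in CI or at startup, that compares the enum values in the SDL with those the backend knows about. The sketch below is an assumption-laden illustration: the `Allegiance` enum is not part of this article's schema, both helper functions are hypothetical, and the regular expression handles only plain enum bodies without descriptions, comments, or directives.

```python
import re
from enum import Enum

SDL = """
enum Allegiance {
  STARK
  LANNISTER
  TARGARYEN
}
"""


class Allegiance(Enum):
    """Backend enum that has not caught up with the schema yet."""

    STARK = "STARK"
    LANNISTER = "LANNISTER"


def sdl_enum_values(sdl, enum_name):
    """Extract the value names of one enum from SDL text (simplified parsing)."""
    pattern = r"enum\s+" + re.escape(enum_name) + r"\s*\{([^}]*)\}"
    match = re.search(pattern, sdl)
    if match is None:
        raise ValueError(f"enum {enum_name} not found in SDL")
    return set(match.group(1).split())


def unhandled_values(sdl, enum_cls):
    """Values the contract allows but the backend enum does not define."""
    return sdl_enum_values(sdl, enum_cls.__name__) - set(enum_cls.__members__)


missing = unhandled_values(SDL, Allegiance)
print(sorted(missing))
```

Failing the build whenever `missing` is non-empty would turn the quiet regression into a loud one.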

Many projects today consist of multiple services, and schema delegation or stitching for such an architecture might also be more cumbersome and involve more upfront investment. Since not all platforms will come up with convenient code-first solutions and tooling, it is also hard to predict whether there will be any compatibility problems.

You should also consider a code-first approach if you need to keep the shape of the API close to the data model, when you need to constantly align trivial changes between API and implementation, and when the frontend is not a priority.

You can read more about that in Prisma's article.

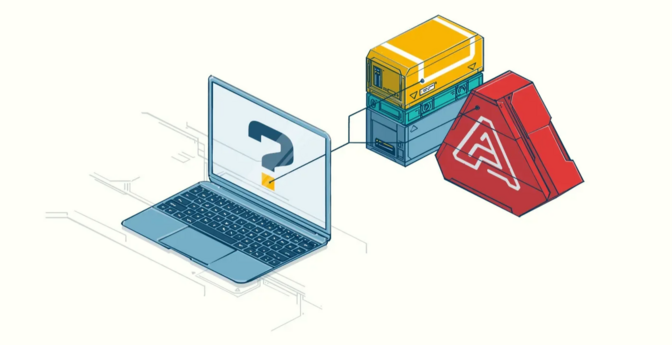

Ariadne: the beauty in simplicity

Coming from a Django background, we naturally ended up using the Graphene ecosystem for our GraphQL APIs, which is code-first and a heavily abstracted framework to work with. As we encountered problems with this solution, some of which were related to the code-first approach, we realized it wasn’t for us. Unable to find a suitable schema-first solution for Python development, we eventually decided to just write our own! Check out Ariadne, which we think makes pythonic GraphQL server development simple and fun. It is deeply rooted in our appreciation for simplicity and extensibility, giving you all the tools needed to mindfully craft your service.

The last word

All things considered, schema-first development should be the default choice when no additional constraints are apparent. That said, the whole GraphQL ecosystem is still at an early stage of rapid growth, and it is hard to predict what the future will bring.

This first article is a manifesto but we’ve got a lot of other interesting ideas around the Schema-first approach that we will be sharing in future articles. So, keep your eyes open for those.

Mirumee guides clients through their digital transformation by providing a wide range of services from design and architecture, through business process automation, to machine learning. We tailor services to the needs of organizations as diverse as governments and disruptive innovators on the ‘Forbes 30 Under 30’ list. Find out more by visiting our services page.